A/B Testing Your Email Campaigns: Optimizing for Success

In this post, we’ll look at A/B testing in email campaigns: what it is, why it matters for your email marketing results, and how you can implement it. It’s aimed at beginners in email marketing and focuses on practical advice you can apply to your own campaigns.

Hello there,

If you’ve been diving into the world of email marketing, you’ve probably heard of A/B testing, also known as split testing. It’s an essential strategy to optimize your email campaigns and drive better results. Let’s explore why and how!

What is A/B Testing and Why is It Important?

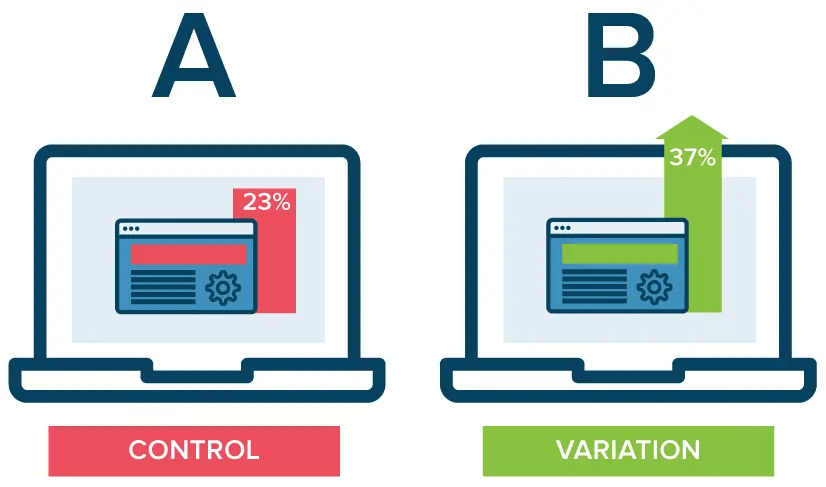

A/B testing involves creating two versions of your email (Version A and Version B), changing one variable between the two, and then sending each version to a portion of your email list to see which performs better. The variable could be anything from the subject line to the email content, images, call-to-action, or the send time.

A/B testing is vital because it allows you to make data-driven decisions about your email campaigns. Instead of guessing what your audience prefers, you can use A/B testing to get clear insights into what works best.

How to Implement A/B Testing

Let’s break down each step of implementing A/B testing in an email marketing campaign and look at how some big brands approach it:

1. Decide What to Test:

What you decide to test will depend on what you want to improve in your email campaigns. For instance, if you want to boost your email open rates, you might test different subject lines. If you want to increase click-through rates, you might test different call-to-action (CTA) texts or button colors.

For example, BuzzFeed is known for its unique and catchy subject lines. They often run A/B tests on their subject lines to see what kind of phrasing or headline style their readers prefer.

2. Create Your A/B Test:

After choosing what to test, it’s time to create two versions of your email differing in the chosen variable. Remember, it’s essential to change only one element at a time to accurately determine what led to any differences in performance.

HubSpot provides an excellent example here. They once ran an A/B test on their CTA buttons, creating two versions of the same email but with different CTA button colors.
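If you keep your email drafts in a structured form, a quick check can confirm that the two versions really differ in only one element. Here is a minimal sketch in Python; the field names (`subject`, `cta_text`, `cta_color`) and the helper `changed_fields` are made up for illustration and are not tied to any particular email platform.

```python
def changed_fields(version_a, version_b):
    """Return the sorted names of fields whose values differ between two versions."""
    all_fields = set(version_a) | set(version_b)
    return sorted(f for f in all_fields if version_a.get(f) != version_b.get(f))


# Hypothetical email drafts: Version B copies Version A and changes one field.
version_a = {
    "subject": "Our spring sale starts today",
    "cta_text": "Shop now",
    "cta_color": "blue",
}
version_b = {**version_a, "cta_color": "green"}

differences = changed_fields(version_a, version_b)
if len(differences) != 1:
    raise ValueError(f"Expected exactly one changed element, found: {differences}")
print("Variable under test:", differences[0])
```

If the check reports more than one changed field, split the idea into separate tests so each result can be traced back to a single change.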

3. Split Your Email List:

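Next, you’ll need to divide your email list. For a fair comparison, split it randomly so that each version goes to a similar mix of recipients, for example half of your list each. If you segment your list based on criteria like demographics, past engagement, or buying behavior, run the test within a segment by randomly splitting that segment, rather than sending Version A to one segment and Version B to another. Otherwise you can’t tell whether the email or the audience caused the difference in performance.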

Etsy, for instance, regularly conducts A/B tests on various customer segments. Running the same test separately for new customers and for returning customers shows how the performance varies between these groups.
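Most email platforms will split your list for you, but if you ever need to do it by hand, a random split could be sketched like this. The function name `split_list`, the seed value, and the example addresses are assumptions for illustration only.

```python
import random


def split_list(recipients, seed=42):
    """Shuffle a copy of the list and cut it in half: group A and group B."""
    shuffled = list(recipients)            # copy, so the original list is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


# Hypothetical recipient list.
recipients = [f"user{i}@example.com" for i in range(10)]
group_a, group_b = split_list(recipients)

print(len(group_a), "recipients get Version A")
print(len(group_b), "recipients get Version B")
```

Shuffling before cutting matters: if your list is sorted by signup date or engagement, taking the first half and the second half as-is would give the two versions different audiences.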

4. Send Your Emails and Gather Data:

Once your emails are sent, it’s time to collect and track data. You’ll want to look at key metrics like open rate, click-through rate, and conversion rate. Depending on your email marketing platform, this data should be automatically collected and displayed for you.

Booking.com is an example of a brand that closely tracks and analyzes a multitude of metrics from their A/B tests, from the open rate to more detailed metrics like which links within the email were clicked.
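If you export raw counts from your platform, the key metrics are simple ratios. The sketch below assumes every rate is calculated against delivered emails; definitions vary between platforms (some report clicks as a share of opens, for example), so check how yours defines each one. The function name and the numbers are made up for illustration.

```python
def campaign_metrics(delivered, opens, clicks, conversions):
    """Compute basic rates for one email version, each as a share of delivered emails."""
    if delivered <= 0:
        raise ValueError("delivered must be greater than zero")
    return {
        "open_rate": opens / delivered,
        "click_through_rate": clicks / delivered,
        "conversion_rate": conversions / delivered,
    }


# Hypothetical counts for the two versions of an email.
metrics_a = campaign_metrics(delivered=1000, opens=200, clicks=40, conversions=8)
metrics_b = campaign_metrics(delivered=1000, opens=250, clicks=50, conversions=10)

for name in metrics_a:
    print(f"{name}: A = {metrics_a[name]:.1%}, B = {metrics_b[name]:.1%}")
```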

5. Analyze the Results:

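After your test has run long enough to collect a meaningful number of opens and clicks, analyze the results. Compare the two versions on the metric you set out to improve, and check that the gap between them is large enough, relative to the number of recipients, that it is unlikely to be random noise.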

Zillow, a real estate company, regularly shares its A/B testing results. For instance, they found that a personalized subject line mentioning the recipient’s city significantly increased the email open rate compared to a generic subject line.
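To judge whether a difference in open rates is more than random noise, one common approach is a two-proportion z-test. The sketch below uses only Python’s standard library; the function name and the example counts are made up for illustration, and your email platform may already report statistical significance for you.

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Two-sided z-test for the difference between two rates (e.g. open rates)."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical result: Version A got 200 opens out of 1,000 emails,
# Version B got 250 opens out of 1,000 emails.
z, p_value = two_proportion_z_test(200, 1000, 250, 1000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")

if p_value < 0.05:
    print("The difference is unlikely to be due to chance alone.")
else:
    print("Not enough evidence yet; consider a larger sample.")
```

A p-value below 0.05 is a common rule of thumb rather than a hard law. With small lists, even a large-looking gap can fail this check, which is a sign to keep the test running or repeat it on a later campaign.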

6. Apply Your Learnings:

The final step is arguably the most important. Use the insights you’ve gained from the A/B test to improve your future email campaigns. The goal is continuous improvement and optimization.

For example, Dell used the learnings from an A/B test to optimize their email campaigns significantly. They found that emails with a more straightforward layout and clearer CTAs outperformed those with a more complex design, leading to a 42% increase in clicks.

Remember, A/B testing is a cycle: test, learn, optimize, and repeat. Each round tells you a little more about what your audience responds to, so your email campaigns keep getting better results over time.

Until next time, happy testing!